This post details my experiences with Intel’s hardware encoding Quick Sync functionality that is part of their new processors. I’ve spent the past three days struggling with the Intel Media SDK to implement encoding of video streams to H.264 and wanted to (a) document the experience and (b) provide some information that I’ve pieced together from various places to anybody else who might be interested in playing around with Intel’s latest and greatest.

The Need

When writing computer vision applications that process fairly high-resolution imagery in real-time, significant amounts of CPU are used and lots of data is generated. For my application in surgical robotics, I have two stereo cameras running at 1024 x 768 @ 30 Hz, which comes to roughly 135 MB/s of full RGB video, in addition to any supplementary data generated by the system. This is a lot of data to process, but worse yet: what do you do with the data once you’ve processed it? Saving results isn’t very easy because your options are pretty much limited to the following:

- Save encoded video to disk, but this requires lots of CPU time which you are probably using for the vision system

- Resizing the video is an option, but it is uncool(TM) to save out lower resolution videos

- Save the raw video to disk, but you need a very fast disk or RAID array

- Save the raw video to RAM, but that gets expensive and requires a follow-up encoding phase

- Offload the video to another computer/GPU, but this is tricky and can require a high-speed bus (e.g. quad gigabit or an extra PCI-e)

- Third-party solutions, such as a hardware encoder plus a backup solution, but this is generally pretty pricey and finicky to set up
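As a quick check on the data rate quoted above, here is a minimal sketch of the arithmetic. It assumes 3 bytes per pixel (8-bit RGB) and two cameras, which is what reproduces the 135 figure; the function names are made up for illustration.

```cpp
#include <cassert>
#include <cstdint>

// Uncompressed video data rate in bytes per second.
inline std::uint64_t rawBytesPerSecond(std::uint64_t width, std::uint64_t height,
                                       std::uint64_t bytesPerPixel,
                                       std::uint64_t fps, std::uint64_t cameras) {
    assert(width > 0 && height > 0 && bytesPerPixel > 0);
    return width * height * bytesPerPixel * fps * cameras;
}

// Convert bytes per second to MiB per second (1 MiB = 1024 * 1024 bytes).
inline double toMiBPerSecond(std::uint64_t bytesPerSecond) {
    return static_cast<double>(bytesPerSecond) / (1024.0 * 1024.0);
}
```

Calling `rawBytesPerSecond(1024, 768, 3, 30, 2)` gives 141,557,760 bytes per second, which is 135 MiB/s before any supplementary data is counted.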

The Solution

Luckily for us, Intel has provided a brand new and almost free solution to this problem of saving lots of video data very fast: Quick Sync (wiki), a hardware encoder/decoder built directly into the latest Sandy Bridge x86 chips. What does this give you? The ability to encode 1080p to H.264 at 100 fps with only a negligible increase in CPU load. I say almost free because while the functionality is built directly into the latest Intel processors ($320) at no extra cost, there is some amount of work in getting the free Intel Media SDK working.

The rest of this post is going to detail my experiences over the past three days in getting Quick Sync to encode raw RGB frames (from OpenCV) to H.264 video.

Caveats/Limitations

First, it is important to know that there are some limitations with the hardware encoding:

- You need a processor that supports Intel Quick Sync and an H67 or Z68 motherboard (at the time of writing) to enable the integrated graphics section of the chip, which contains the hardware encoders/decoders.

- The integrated graphics must be plugged into a monitor/display and be actively on and showing something (ebay a super cheap small LCD if you have to).

- Only a limited number of codecs are available: H.264, VC-1, and MPEG-2.

- Additionally, a maximum resolution of 1920×1200 is available for hardware encoding. I believe you can work around this issue by encoding multiple sessions simultaneously, although it looks like the code gets more complicated. I have tested running two applications that are both encoding at the same time without any difficulties so I know that works at least.

- Finally, documentation is somewhat scarce and the limited number of posts on the Intel Media SDK forum are probably your only bet for finding answers to any issues.

Setup

My current setup is an Intel i7-2600K processor (not overclocked) on a Z68 motherboard with a GTX 460 powering two monitors and a projector. I have Visual Studio 2010 Ultimate installed for C/C++ development. Originally I had the projector plugged into the integrated graphics, but that didn’t seem to work (i.e. Quick Sync initialization would fail). I removed the graphics card and plugged one of my monitors into the integrated graphics, and Quick Sync initialized fine, so I put the GTX 460 back in and plugged it into the other monitor and the projector. Things were still happy with Quick Sync, so I imagine that the integrated graphics must be not only plugged in, but actively displaying something.

To get started, Windows 7 x64 had already installed Intel’s HD Graphics drivers, so I just installed the Intel Media SDK 3.0. It comes with a reference manual, a bunch of samples in C++, and two approaches to using Quick Sync:

- Via the API: This is the official, supported way with the most flexibility. Unfortunately, only elementary streams are supported, meaning the output files are literally the raw bitstream and cannot be played with VLC or other media players.

- Via DirectShow filters: For quick & dirty simple approaches, the SDK comes with some sample DirectShow filters for encoding/decoding. Requesting help on the forums regarding the DirectShow filters seems to always prompt a response along the lines of “well, our DirectShow filters are really just samples and aren’t really supported or well tested…” which doesn’t leave me with warm fuzzies.

The reason I’m mentioning these approaches is that when I first started out, I didn’t know any of this and just launched into approach #1 by looking at the sample_encode example provided with the SDK. Had I known the limitations of #1 and the possibility of #2, I would probably have tried going down the DirectShow route instead. I might look into #2 later, but #1 seems to be working fine for me at the moment.

Verifying Quick Sync Works

I discovered that the simplest way to verify Quick Sync is working is to use GraphEdit to create a simple transcoding filter graph (no coding necessary). The process is described in this Intel blog post: simply download GraphEdit, then go to Graph -> Insert Filters. Select DirectShow Filters and insert “File Source (Async.)” (select a file playable with Windows Media Player) -> “AVI Splitter”, “AVI Decompressor” -> “Intel Media SDK H.264 Encoder”, “Intel Media SDK MP4 Muxer” and finally a “File Writer” (provide it with a name to save the file). Then you can run the graph and it should transcode the file for you. In my case, it converted a small ~1 minute XVID video to an H.264 video in just a few seconds. Coolnesses!

Adapting sample_encode

After reading some of the introductory material in the reference manual, I turned to the sample code, which is usually how I best start learning something. The sample that looked most promising was sample_encode. Unfortunately, the input to the program is a raw YUV video file (which then gets shoved through the encoder and out to a *.h264 file), but who has those lying around? So I figured the best place to start was to connect that code to my program that outputs processed video frames for saving. The processing happens in OpenCV, so essentially I wanted to bridge the gap between a cv::Mat holding raw RGB pixels and the sample_encode Quick Sync encoding/saving code. First, I added a queue, mutex, and done pointer to CEncodingPipeline::Run and replaced the call to CSmplYUVReader::LoadNextFrame with a wait for the next available frame in the queue, a memcpy, and a check of the done pointer to see if the incoming video stream had stopped (in which case make sure to set sts = MFX_ERR_MORE_DATA). Then in the input thread, I used cv::cvtColor to convert the image to YUV (or YCrCb as referred to in the documentation) and packed it into the YV12 format (or rather NV12, which holds the same 4:2:0 data but interleaves the U and V samples in a single plane instead of storing them as separate planes). After numerous false starts, this finally spit out a video file that looked good, until I noticed something fairly subtle: the colors were a bit off.
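The queue, mutex, and done flag described above can be sketched roughly as follows. This is not the code that went into CEncodingPipeline::Run; it is a minimal standalone illustration using C++11 threading primitives (an assumption, since Visual Studio 2010 does not ship them), and FrameQueue and its methods are hypothetical names.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstdint>
#include <deque>
#include <mutex>
#include <vector>

// Hypothetical hand-off queue between the vision thread (producer) and the
// encoding loop (consumer). Names are illustrative, not from the Media SDK.
class FrameQueue {
public:
    typedef std::vector<std::uint8_t> Frame;

    // Producer: enqueue a copy of one frame's pixel data.
    void push(const Frame& frame) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            assert(!done_ && "push() called after finish()");
            frames_.push_back(frame);
        }
        ready_.notify_one();
    }

    // Producer: signal that no more frames will arrive.
    void finish() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            done_ = true;
        }
        ready_.notify_all();
    }

    // Consumer: block until a frame is available or the stream has ended.
    // Returns false once the queue is drained and finish() was called; that is
    // the point where the encoding loop would set sts = MFX_ERR_MORE_DATA.
    bool pop(Frame& out) {
        std::unique_lock<std::mutex> lock(mutex_);
        ready_.wait(lock, [this] { return !frames_.empty() || done_; });
        if (frames_.empty()) return false;
        out.swap(frames_.front());
        frames_.pop_front();
        return true;
    }

private:
    std::mutex mutex_;
    std::condition_variable ready_;
    std::deque<Frame> frames_;
    bool done_ = false;
};
```

In the encoding loop, a `pop()` call would stand in for LoadNextFrame: on `true`, memcpy the frame into the surface; on `false`, report that the input has run out so the encoder can drain its buffered frames.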

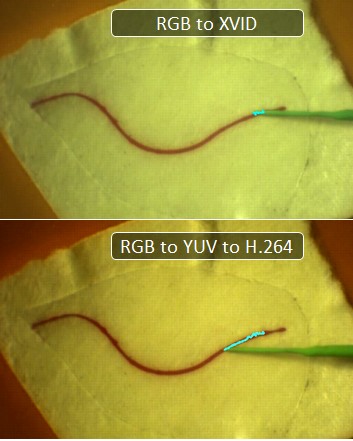

So apparently, among the million and one different ways to convert RGB to YUV, I got the wrong one. Well, let’s go directly to the big guns: Quick Sync is an Intel product, so the Intel IPP routines should use the same conversion, right? I headed over to the documentation for ippiRGBtoYUV and implemented the algorithm from their docs. That worked better, but the color was still slightly wrong and more saturated. So converting from RGB to YUV to feed into Quick Sync was a bust, although quite possibly there is a bug somewhere in my code.
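One plausible, unverified explanation for slightly-off colors is a range mismatch. OpenCV's RGB-to-YCrCb conversion is full range (Y spans 0..255), while video decoders commonly assume limited-range BT.601 or BT.709 (Y spans 16..235). The sketch below only illustrates how far apart two common 8-bit conventions are; it makes no claim about which one Quick Sync or a given player expects, and the function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

struct YCbCr { std::uint8_t y, cb, cr; };

// Round to nearest and clamp a floating-point sample to the 8-bit range.
inline std::uint8_t clamp8(double v) {
    return static_cast<std::uint8_t>(
        std::min(255.0, std::max(0.0, std::floor(v + 0.5))));
}

// Full-range BT.601 (JPEG-style): Y spans 0..255.
inline YCbCr rgbToYCbCrFullRange(std::uint8_t r, std::uint8_t g, std::uint8_t b) {
    const double y = 0.299 * r + 0.587 * g + 0.114 * b;
    YCbCr out;
    out.y  = clamp8(y);
    out.cb = clamp8(128.0 + 0.564 * (b - y));
    out.cr = clamp8(128.0 + 0.713 * (r - y));
    return out;
}

// Limited-range ("studio swing") BT.601: Y spans 16..235, chroma 16..240.
inline YCbCr rgbToYCbCrLimitedRange(std::uint8_t r, std::uint8_t g, std::uint8_t b) {
    YCbCr out;
    out.y  = clamp8( 16.0 + ( 65.481 * r + 128.553 * g +  24.966 * b) / 255.0);
    out.cb = clamp8(128.0 + (-37.797 * r -  74.203 * g + 112.000 * b) / 255.0);
    out.cr = clamp8(128.0 + (112.000 * r -  93.786 * g -  18.214 * b) / 255.0);
    return out;
}
```

Pure white maps to Y = 255 in the first convention and Y = 235 in the second. If a decoder assumes limited range but is fed full-range samples, it will stretch the values again, which shows up as extra contrast and saturation.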

RGB to H.264

OK, well, now what? If feeding YUV isn’t working, can we directly feed in RGB? The answer is sort of. Part of Quick Sync is VPP, the hardware Video Pre-Processing pipeline. This is a set of filters, such as scaling, cropping, etc., that you can run in hardware before the encoding step. One of the preprocessing steps is color conversion, where we can convert RGB4 to NV12. RGB4 is really just RGBA where each pixel is represented by four bytes of red, green, blue, alpha. The trick is to get it to work. First, I convert OpenCV’s RGB to RGBA; that’s the easy part. In sample_encode, the trick to enabling their VPP code is to make sure that the input format vpp.In.FourCC is different from the output format vpp.Out.FourCC. So I set both in CEncodingPipeline::InitMfxVppParams:

m_mfxVppParams.vpp.In.FourCC = MFX_FOURCC_RGB4; // changed line

// in-between code, calculating frame rates, setting sizes and crops…

memcpy(&m_mfxVppParams.vpp.Out, &m_mfxVppParams.vpp.In, sizeof(mfxFrameInfo));

m_mfxVppParams.vpp.Out.FourCC = MFX_FOURCC_NV12; // added line

And boom, now I can memcpy my RGBA data into pSurf->Data.R and things magically work. You should also set pSurf->Data.G = pSurf->Data.R + 1 and pSurf->Data.B = pSurf->Data.R + 2 so that the channel pointers match the byte order of the data you copied in.
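The 3-byte to 4-byte expansion before that memcpy can be sketched as below. It is written against plain byte buffers rather than cv::Mat or mfxFrameSurface1 so it stands alone; expandTo32bpp is a hypothetical helper. It also copies row by row using a destination pitch, on the assumption that the surface rows may be padded (the surface data carries a Pitch field), in which case a single flat memcpy would skew the image.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Expand tightly packed 3-byte pixels into a 4-byte-per-pixel buffer,
// honoring the destination pitch (bytes per row, possibly padded).
// Channel order is preserved; the fourth byte is set to opaque alpha.
inline void expandTo32bpp(const std::uint8_t* src, std::size_t srcStride,
                          std::uint8_t* dst, std::size_t dstPitch,
                          std::size_t width, std::size_t height) {
    assert(src && dst);
    assert(srcStride >= width * 3 && dstPitch >= width * 4);
    for (std::size_t row = 0; row < height; ++row) {
        const std::uint8_t* s = src + row * srcStride;
        std::uint8_t* d = dst + row * dstPitch;
        for (std::size_t col = 0; col < width; ++col) {
            d[4 * col + 0] = s[3 * col + 0];
            d[4 * col + 1] = s[3 * col + 1];
            d[4 * col + 2] = s[3 * col + 2];
            d[4 * col + 3] = 255;  // alpha, ignored by the color conversion
        }
    }
}
```

Whatever channel order the source buffer uses ends up unchanged in the destination, so the Data.R, Data.G, and Data.B pointers have to be set to the matching byte offsets.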

But what about AVIs?

So the one downside to this whole approach is that the API provided by the Intel Media SDK produces an elementary stream, not an MP4 or AVI. This is fine if you just want to get your video compressed and onto the disk, but somewhat disheartening when you actually want to see that recorded video. There are, of course, video players that can decode elementary streams, including DGAVCDec and the Intel Media SDK’s sample_dshow_player. In fact, if you compile and run sample_dshow_player, it will use the DirectShow filters to not only load and play the elementary H.264 stream you’ve generated, but also transcode it to an AVI/MP4 file. That is very nice, but still non-optimal. In the future, I’d like to check out the DirectShow route so I can automatically generate AVI files. Furthermore, it is even possible to mux in audio, which would be nice so I can record audio of the system instead of just video.
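For a rough sanity check of an elementary stream without a player, one option is to scan it for NAL unit start codes. The sketch below assumes the file is an H.264 Annex B byte stream, where each NAL unit is preceded by the bytes 00 00 01 (optionally with an extra leading 00) and the low five bits of the next byte give the unit type. That the SDK writes exactly this layout is an assumption here, and listNalUnitTypes is a hypothetical helper.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Return the nal_unit_type (low 5 bits of the byte following each start code)
// for every NAL unit found in an Annex B byte stream.
inline std::vector<int> listNalUnitTypes(const std::uint8_t* data, std::size_t size) {
    assert(data || size == 0);
    std::vector<int> types;
    for (std::size_t i = 0; i + 3 < size; ++i) {
        if (data[i] == 0 && data[i + 1] == 0 && data[i + 2] == 1) {
            types.push_back(data[i + 3] & 0x1F);
            i += 2;  // jump past the start code; the loop increment lands on the header byte
        }
    }
    return types;
}
```

A healthy stream would typically begin with types 7 (sequence parameter set) and 8 (picture parameter set), followed by 5 (IDR slice) and then mostly 1 (non-IDR slice).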

The End Result

So there we have it: video now comes in from the cameras, is processed by my vision system, sent to Quick Sync for encoding, and saved to disk. Encoding happens faster than I can shove frames in and barely increases my CPU load (there are some memcpy operations and various API calls to manage Quick Sync and save the resulting stream to a file, but these are all relatively lightweight). I get an elementary H.264 stream out, which I will then batch convert using a modified version of the sample_dshow_player. It’s not completely optimal yet (I would prefer to output AVI/MP4 files), but for three days’ worth of futzing around with the Intel Media SDK, I think what I have works quite well for my needs.