Even though I was forced to get up unreasonably early for an advisor meeting, today went remarkably well. I discovered the deadline for BioRob 2012 had been pushed back from Jan 15th to Jan 31st, which gives me a huge breather. That greatly reduces (or at least postpones) the slew of all-nighters I would have to pull next week. My advisor meeting went well (i.e. I didn’t get yelled at :)) too. I spent the afternoon compiling OpenCV and PCL to try out the new KinectFusion. I was able to get the sample code for dense stereo working in OpenCV on the GPU which should be very handy. Finally, my coauthor and I got our journal paper down to 14 pages and are pretty much ready to submit. A few more proofs, final preparations, a few comments from friends willing to look at it, and bam we should be good to go! All in all, a very busy, yet rewarding day.

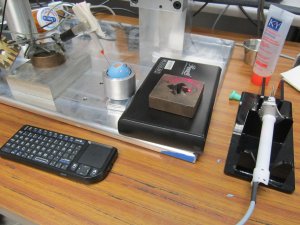

Today was “get back to the salt mines and get your shovel rusty” day. Our next set of experiments with our lab’s surgical robot Micron (white handled gizmo on the right in the black plexiglass holder) is designed for retinal procedures. To begin more realistic evaluation, I am trying to create a fake, or phantom, eye for which we can do tests. Luckily, we have these blue rubber eye-ball phantoms from JHU so mostly I just have to adapt the setup for our needs. Since retinal surgeries create little holes, or ports, in the side of the eye to stick tools through to get back to the retina on the back of the eye, I spent my day carving holes in blue rubber and trying to fit small 3 mm tubes to form a trocar. I mostly succeeded by dinner time and went to pick a friend up at the airport and eat at Cracker Barrel, which was nice. Upon returning, I discovered that our tool didn’t work very well at all, possibly because the rubber eye couldn’t rotate very well in my metal spherical holder. KY lubricant to the rescue! A dab in the bottom and bam, suddenly the eye could move around as easily as you looking right and left. Re-running a few preliminary tests showed some visual improvement with our surgical robot so I’m happy. Hopefully my advisor will be happy too at our insanely early meeting tomorrow. I called it quits early around midnight and headed home to write some of my thesis (and write this blog while I try to prevent myself from getting fat by riding an exercise bike). It’s all about multi-tasking – oh and not having a life 😀

I went with a friend to Star of India on Craig because Wed. is chicken masala day only to discover they were closed with tarps covering the windows and doors! That is not good at all, and we were forced to Kohli’s for our Indian food cravings. It wasn’t too bad actually, but fresh nan is the best (after a while 0/0 starts getting stale). I also started my thesis party tonight. I got some papers reviewed: apparently everybody and their brother have tried to do 3D retinal reconstruction. Luckily, a lot of it seems very slow or only outputs depth maps rather than metric reconstructions. Also, my friends were shocked to hear that I put my papers into svn as v1, v2, …, v23 – but I think it’s being doubly safe with my version control. Oh and I wasn’t quite as done as I thought I was with this journal: today I got to proof my partner in crime’s recently written section and was able to shave off another quarter of a page so we are at 14.25 pages – almost there!

Well it’s back to the salt mines: this morning I departed from Orlando (left) and arrived at Pittsburgh (right). It is times like these that I wonder why I chose CMU. Then I remember the awesome robotics I get to do and I’m happy again. In other news, I finished up my part of the journal (14.5 pages) and handed it off to my partner in crime. Then I went on an airport and Steak ‘n’ Shake run. Oh and at T-112 days, I started my thesis!

Ahhh!!! My journal paper with a 14 page limit was 16.5 pages this morning and after an entire day of chop chop chopping, I have reduced it to 15 pages. One more page to go (somehow). In the meantime, I just packed to leave in 4 hours when I head to the airport and fly back to Pittsburgh. Fun fun!

So as the dawning new year coincides with the 4.5 year anniversary of my entrance to the PhD program at the Robotics Institute at CMU, it is time to seriously think about dissertations, defenses (wait, singular, just one!), and graduation. I am hoping to graduate in the May 20th CMU commencement this spring, which is just in time for the world to end six or so months later. Hoooray! So I figured why not try to document this incredibly stressful time in my life (since of course, there is nothing busy people like better than more menial tasks).

Today I spent reviewing my journal paper and adding in some of my latest results. Alas I am now 3 pages over my 14 page limit. Tomorrow will be trimming (or probably chain-sawing is a more suitable verb ;)). Being that day traditionally associated with plans and resolves and similar such nonsense, I decided to break out my ill-used Google Calendar and make a schedule. My first scheduled event was to start my thesis when I get back to the frozen wasteland of Pittsburgh and my second action was to schedule a thesis writing party every day after supper – oh so much fun 😀 I tentatively set April 25th as my defense date since that would be exactly a year from my thesis proposal. w00t!

So the media is abuzz with the latest crisis: a 15-cent tax on Christmas trees to spend on advertising and promoting real Christmas trees. Oh the horror! But hold on a sec, where did this tax come from? Before we run wildly about accusing the government of nefarious designs, let’s do some digging. If you trace the history, you will find that the Christmas tree farmers themselves requested the tax!

Yes, as a guy in the Christmas tree biz (every Thanksgiving I don a lumberjack outfit and chainsaw, haul, bale, and sell trees on my grandparents’ Christmas tree farm in North Carolina), I am here to tell you that in 2008, the National Christmas Tree Association (an association representing the interests of 5000 growers in the US) petitioned the US Department of Agriculture (USDA) to impose a $0.15 tax on Christmas trees. (Those who are sharp should note this was during an entirely different administration, not that that has anything to do with anything).

But why would tree growers want this tax? Because sales for “live trees” were declining due to the advertising efforts of artificial Christmas tree manufacturers, and the distributed nature of thousands of small tree growers meant they couldn’t effectively advertise their products. The same thing happened to milk, so what did they do? In 1983, the dairy farmers agreed to pay the USDA a small tax (via the Checkoff program) to advertise for them; hence, the “Got Milk” commercials were born. Ironically, this tax was…wait for it…yes, $0.15 per hundredweight of milk.

Seeing how this worked for milk and 17 other agriculture products, the Christmas tree farmers wanted a piece of the action. The new tax would be effective for 3 years, after which all growers who paid the tax could vote to either renew or dismantle it. After a several year study, during which comments were requested from individual tree growers and regional Christmas Tree Associations (such as the North Carolina one), 70% of growers and 90% of associations agreed with the idea. Thus, the USDA drafted a $0.15 tax to raise $2 million for advertising, which tree growers are hoping will increase demand and bolster a declining live Christmas tree market.

And now you know….the rest of the story (i.e., the one where bad journalists, sensationalist media outlets, opportunistic politicians, and ignorant Americans all muddle about yelling at each other). Now to be fair, I don’t think this tax would help Christmas tree farmers such as my family that much. We primarily focus on retail where we do our own advertising and networking. That seems to be working pretty well for us. I have heard family members worry about the economy and the reusability of artificial trees, though, so I don’t know for sure. From a consumer stand-point, the tax doesn’t seem to make a lot of sense. It is unlikely a monopoly is going to develop in the artificial tree business, so a decline in demand for real trees should just lower prices for the consumer. The NCTA claims increased demand would offset the tax, meaning the effect on the consumer is negligible. Honestly, I’m not sure I believe that, but then again I’m no economist. However, the one thing I am sure of is that the government is not trying to kill Christmas or squeeze some more money into Uncle Sam’s pocket by grinching your holiday spirit. Also, if you want the thrill of choosing your own Christmas tree and having me chainsaw it down, haul it halfway up a mountain, bale it, and tie it to your car, come visit www.christmastrees4u.com. 🙂

This post details my experiences with Intel’s hardware encoding Quick Sync functionality that is part of their new processors. I’ve spent the past three days struggling with the Intel Media SDK to implement encoding of video streams to H.264 and wanted to (a) document the experience and (b) provide some information that I’ve pieced together from various places to anybody else who might be interested in playing around with Intel’s latest and greatest.

The Need

When writing computer vision applications that process fairly high-resolution imagery in real-time, significant amounts of CPU are used and lots of data is generated. For my application in surgical robotics, I have two stereo cameras running at 1024 x 768 @ 30 Hz = 135 MB/s in full RGB video, in addition to any supplementary data generated by the system. This is a lot of data to process, but worse yet: what do you do with the data once you’ve processed it? Saving results isn’t very easy because your options are pretty much limited to the following:

- Save encoded video to disk, but this requires lots of CPU time which you are probably using for the vision system

- Resizing the video is an option, but it is uncool(TM) to save out lower resolution videos

- Save the raw video to disk, but you need a very fast disk or RAID array

- Save the raw video to RAM, but that gets expensive and requires a follow-up encoding phase

- Offload the video to another computer/GPU, but this is tricky and can require a high-speed bus (e.g. quad gigabit or an extra PCI-e)

- 3rd party solutions, such as hardware encoder + backup solution, but this is generally pretty pricey and finicky to set up
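For reference, the 135 MB/s figure above can be reproduced with a few lines of arithmetic. This is only a sketch: it assumes 3 bytes per pixel (8-bit RGB) and counts a megabyte as 1024 x 1024 bytes, and the function name is made up for illustration.

```cpp
#include <cstdint>

// Raw (uncompressed) video bandwidth in bytes per second.
// Hypothetical helper, not part of any SDK.
constexpr std::uint64_t rawVideoBytesPerSecond(std::uint64_t width,
                                               std::uint64_t height,
                                               std::uint64_t bytesPerPixel,
                                               std::uint64_t framesPerSecond,
                                               std::uint64_t cameraCount) {
    return width * height * bytesPerPixel * framesPerSecond * cameraCount;
}

// Two 1024 x 768 cameras, 8-bit RGB (assumed 3 bytes per pixel), 30 Hz.
constexpr std::uint64_t kBytesPerSecond =
    rawVideoBytesPerSecond(1024, 768, 3, 30, 2);

// Expressed in units of 1024 * 1024 bytes.
constexpr std::uint64_t kMegabytesPerSecond = kBytesPerSecond / (1024 * 1024);

static_assert(kBytesPerSecond == 141557760ULL, "2 cameras of raw RGB at 30 Hz");
static_assert(kMegabytesPerSecond == 135, "matches the figure quoted in the post");
```

At that rate an hour of recording is roughly 475 GB of raw video, which is why every option in the list has a catch.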

The Solution

Luckily for us, Intel has provided a brand new and almost free solution to this problem of saving lots of video data very fast: Quick Sync (wiki), a hardware encoder/decoder built directly into the latest SandyBridge x86 chips. What does this give you? The ability to encode 1080p to H.264 at 100 fps with only a negligible increase in CPU. I say almost free because while the functionality is free and built directly into the latest Intel processors ($320), there is some amount of work in getting the free Intel Media SDK working.

The rest of this post is going to detail my experiences over the past three days in getting Quick Sync to encode raw RGB frames (from OpenCV) to H.264 video.

Caveats/Limitations

First, it is important to know that there are some limitations with the hardware encoding:

- You need a processor that supports Intel Quick Sync and an H67 or Z68 motherboard (at the time of writing) to enable the integrated graphics section of the chip which contains the hardware encoders/decoders.

- The integrated graphics must be plugged into a monitor/display and be actively on and showing something (ebay a super cheap small LCD if you have to).

- Only a limited number of codecs are available: H.264, VC-1, and MPEG-2.

- Additionally, a maximum resolution of 1920×1200 is available for hardware encoding. I believe you can work around this issue by encoding multiple sessions simultaneously, although it looks like the code gets more complicated. I have tested running two applications that are both encoding at the same time without any difficulties so I know that works at least.

- Finally, documentation is somewhat scarce and the limited number of posts on the Intel Media SDK forum are probably your only bet for finding answers to any issues.

Setup

My current setup is an Intel i7-2600K processor (not overclocked) on a Z68 motherboard with a GTX 460 powering two monitors and a projector. I have Visual Studio 2010 Ultimate installed for C/C++ development. Originally I had the projector plugged into the integrated graphics, but it seems that didn’t work (i.e. QuickSync initialization would fail). I removed the graphics card and plugged in one of my monitors to the integrated graphics and QuickSync initialized fine, so I put the GTX 460 back in and plugged it to the other monitor and projector. Things were still happy with Quick Sync so I imagine that the integrated graphics must be not only plugged in, but actively displaying something.

To get started, Windows 7 x64 had already installed Intel’s HD Graphics drivers, so I just installed the Intel Media SDK 3.0. It comes with a reference manual, a bunch of samples in C++, and two approaches to using Quick Sync:

- Via the API: This is the official, supported way with the most flexibility. Unfortunately, only elementary streams are supported, meaning the outputted files are literally the raw bitstream and cannot be played with VLC or other media players.

- Via DirectShow filters: For quick & dirty simple approaches, the SDK comes with some sample DirectShow filters for encoding/decoding. Requesting help on the forums regarding the DirectShow filters seems to always prompt a response along the lines of “well, our DirectShow filters are really just samples and aren’t really supported or well tested…” which doesn’t leave me with warm fuzzies.

The reason I’m mentioning these approaches is because when I first started out, I didn’t know any of this and just launched into approach #1 by looking at the sample_encode example provided with the SDK. Had I known the limitations of #1 and the possibility of #2, I would have probably tried going down the DirectShow route instead. I might look into #2 later, but #1 seems to be working fine for me at the moment.

Verifying Quick Sync Works

I discovered the simplest way to verify Quick Sync is working is to use GraphEdit to create a simple transcoding filter (no coding necessary). The process is described in this Intel blog post: simply download GraphEdit, then go to Graph -> Insert Filters. Select DirectShow Filters and insert “File Source (Async.)” (select a file playable with Windows Media Player) -> “AVI Splitter”, “AVI Decompressor” -> “Intel Media SDK H.264 Encoder”, “Intel Media SDK MP4 Muxer” and finally a “File Writer” (provide it with a name to save the file). Then you can run the graph and it should transcode the file for you. In my case, it converted a small ~1 minute XVID video to an H.264 video in just a few seconds. Coolnesses!

Adapting sample_encode

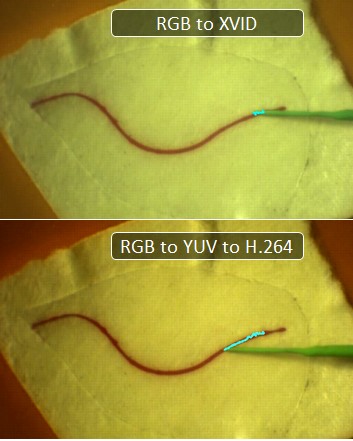

After reading some of the introductory material in the reference manual, browsing the sample code is usually how I best start learning something. The sample code that looked most promising was sample_encode. Unfortunately, the input to the program is a raw YUV video file (that then gets shoved through the encoder and out to a *.h264 file), but who has those lying around? So I figured the best place to start was to connect that code to my program that outputs processed video frames for saving. The processing happens in OpenCV, so essentially I wanted to bridge the gap between a cv::Mat class with raw RGB pixels and the sample_encode Quick Sync encoding/saving code. First, I added a queue, mutex, and done pointer to CEncodingPipeline::Run and replaced the call to CSmplYUVReader::LoadNextFrame with a wait for the next available frame in the queue, a memcpy, and a check of the done pointer to see if the incoming video stream had stopped (in which case make sure to set sts = MFX_ERR_MORE_DATA). Then in the input thread, I used cv::cvtColor to convert the image to YUV (or YCrCb as referred to in the documentation) and packed it into the YV12 format (or rather NV12, which holds the same samples but interleaves U and V in a single plane instead of storing them as separate planes). After numerous false starts, this finally spit out a video file that looked good, until I noticed something fairly subtle: the colors were a bit off.
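The queue, mutex, and done flag described above amount to a small producer/consumer hand-off. The following is a minimal sketch of that idea in standard C++11, not the code I actually wrote into sample_encode: the `FrameQueue` class and its method names are hypothetical, and it uses `std::mutex` and `std::condition_variable` rather than whatever primitives the SDK samples use.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstdint>
#include <mutex>
#include <queue>
#include <utility>
#include <vector>

using Frame = std::vector<std::uint8_t>;

// Hypothetical hand-off between the vision thread (producer) and the
// encoding loop (consumer).
class FrameQueue {
public:
    // Producer side: hand over one processed frame.
    void push(Frame frame) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            assert(!done_ && "push() called after finish()");
            frames_.push(std::move(frame));
        }
        ready_.notify_one();
    }

    // Producer side: signal that the incoming video stream has stopped.
    void finish() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            done_ = true;
        }
        ready_.notify_all();
    }

    // Consumer side: block until a frame is available or the stream ended.
    // Returns false once the stream is finished and the queue is drained;
    // the encoding loop would treat that like the end of the input file
    // (the point where the post sets sts = MFX_ERR_MORE_DATA).
    bool pop(Frame& out) {
        std::unique_lock<std::mutex> lock(mutex_);
        ready_.wait(lock, [this] { return done_ || !frames_.empty(); });
        if (frames_.empty()) {
            return false;
        }
        out = std::move(frames_.front());
        frames_.pop();
        return true;
    }

private:
    std::mutex mutex_;
    std::condition_variable ready_;
    std::queue<Frame> frames_;
    bool done_ = false;
};
```

In this arrangement the input thread calls `push()` for every frame and `finish()` when the cameras stop, while the encoding loop calls `pop()` where it previously loaded the next frame from the YUV file and copies the returned bytes into the encoder's input surface.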

So apparently, among the million and one different ways to convert RGB to YUV, I got the wrong one. Well let’s go directly to the big guns: Quick Sync is an Intel product, so the Intel IPP routines should be the same right? I head over to the documentation for ippiRGBtoYUV and implement the algorithm from their docs. That works better, but the color is still slightly wrong and more saturated. So converting from RGB to YUV to feed into Quick Sync is a bust, although quite possibly there is a bug somewhere in my code.
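For anybody who wants to retry the YUV route, here is a rough sketch of packing RGB into NV12 by hand. It is not the code I used, and I never confirmed which conversion Quick Sync expects; the sketch simply assumes the widely used 8-bit integer approximation of BT.601 "limited range" (Y in 16..235, U/V in 16..240). Mixing that up with a full-range conversion is one plausible cause of slightly wrong saturation, but that is a guess. The function name and the R, G, B byte order of the input are also assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Convert tightly packed 8-bit RGB (assumed R, G, B byte order, no row
// padding) to NV12: a full-resolution Y plane followed by one
// half-resolution plane of interleaved U, V pairs.
std::vector<std::uint8_t> rgbToNv12(const std::uint8_t* rgb, int width, int height) {
    // NV12 subsamples chroma 2x2, so both dimensions must be even.
    assert(width > 0 && height > 0 && width % 2 == 0 && height % 2 == 0);

    const std::size_t lumaSize = static_cast<std::size_t>(width) * height;
    std::vector<std::uint8_t> nv12(lumaSize + lumaSize / 2);
    std::uint8_t* yPlane = nv12.data();
    std::uint8_t* uvPlane = nv12.data() + lumaSize;

    for (int y = 0; y < height; y += 2) {
        for (int x = 0; x < width; x += 2) {
            int sumR = 0, sumG = 0, sumB = 0;
            // Luma for each pixel of the 2x2 block; accumulate RGB for chroma.
            for (int dy = 0; dy < 2; ++dy) {
                for (int dx = 0; dx < 2; ++dx) {
                    const std::size_t pixel =
                        static_cast<std::size_t>(y + dy) * width + (x + dx);
                    const int r = rgb[3 * pixel + 0];
                    const int g = rgb[3 * pixel + 1];
                    const int b = rgb[3 * pixel + 2];
                    yPlane[pixel] = static_cast<std::uint8_t>(
                        ((66 * r + 129 * g + 25 * b + 128) >> 8) + 16);
                    sumR += r;
                    sumG += g;
                    sumB += b;
                }
            }
            // One chroma pair per 2x2 block, from the rounded block average.
            const int r = (sumR + 2) / 4;
            const int g = (sumG + 2) / 4;
            const int b = (sumB + 2) / 4;
            // 32768 is 128 << 8: adding the chroma offset before the shift
            // keeps the shifted value non-negative.
            const int u = (-38 * r - 74 * g + 112 * b + 128 + 32768) >> 8;
            const int v = (112 * r - 94 * g - 18 * b + 128 + 32768) >> 8;
            const std::size_t uvIndex = static_cast<std::size_t>(y / 2) * width + x;
            uvPlane[uvIndex + 0] = static_cast<std::uint8_t>(u);
            uvPlane[uvIndex + 1] = static_cast<std::uint8_t>(v);
        }
    }
    return nv12;
}
```

With these coefficients pure white maps to Y = 235 rather than 255, so output from this sketch only looks right if the decoder also assumes limited range.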

RGB to H.264

OK, well, now what? If feeding YUV isn’t working, can we directly feed in RGB? The answer is sort of. Part of Quick Sync contains VPP or the hardware Video Pre-Processing pipeline. This is a set of filters, such as scaling, cropping, etc that you can run in hardware before the encoding step. One of the preprocessing steps is color conversion where we can convert RGB4 to NV12. RGB4 is really just RGBA where each pixel is represented by four bytes of red, green, blue, alpha. The trick is to get it to work. First, I convert OpenCV’s RGB to RGBA; that’s the easy part. In sample_encode, the trick to enabling their VPP code is to make sure that the input format vpp.In.FourCC is different than the output format vpp.Out.FourCC. So I set both in CEncodingPipeline::InitMfxVppParams:

m_mfxVppParams.vpp.In.FourCC = MFX_FOURCC_RGB4; // changed line

// in-between code, calculating frame rates, setting sizes and crops…

memcpy(&m_mfxVppParams.vpp.Out, &m_mfxVppParams.vpp.In, sizeof(mfxFrameInfo));

m_mfxVppParams.vpp.Out.FourCC = MFX_FOURCC_NV12; // added line

And boom, now I can memcpy my RGBA data into pSurf->Data.R and things magically work. You should also set pSurf->Data.G = pSurf->Data.R + 1 and pSurf->Data.B = pSurf->Data.R + 2.
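The "easy part" of padding three-channel pixels out to four bytes can be sketched as below. This is an illustration only: `expandRgbToRgba` and its `dstPitch` parameter are hypothetical names, the loop copies channels in whatever order the source has them, and you should check the SDK manual for the byte order RGB4 expects before trusting the colors. OpenCV's cv::cvtColor can do the same expansion; the hand-written loop just makes the memory layout explicit.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Expand tightly packed 3-byte pixels into 4-byte pixels with a constant
// alpha value. dstPitch is the number of bytes between the starts of two
// consecutive destination rows, which may be larger than width * 4 when
// the destination surface pads its rows.
void expandRgbToRgba(const std::uint8_t* src, int width, int height,
                     std::uint8_t* dst, std::size_t dstPitch,
                     std::uint8_t alpha = 255) {
    assert(dstPitch >= static_cast<std::size_t>(width) * 4);
    for (int y = 0; y < height; ++y) {
        const std::uint8_t* in = src + static_cast<std::size_t>(y) * width * 3;
        std::uint8_t* out = dst + static_cast<std::size_t>(y) * dstPitch;
        for (int x = 0; x < width; ++x) {
            out[4 * x + 0] = in[3 * x + 0];
            out[4 * x + 1] = in[3 * x + 1];
            out[4 * x + 2] = in[3 * x + 2];
            out[4 * x + 3] = alpha;  // padding bytes past width * 4 stay untouched
        }
    }
}
```

Writing row by row with an explicit pitch matters because a single memcpy of the whole image is only correct when the destination rows have no padding.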

But what about AVIs?

So the one downside to this whole approach is that the API provided by the Intel Media SDK produces an elementary stream, not an MP4 or AVI. This is fine if you just want to get your video compressed and onto the disk, but somewhat disheartening when you actually want to see that recorded video. There are, of course, video players that can decode elementary streams, including DGAVCDec and the Intel Media SDK’s sample_dshow_player. In fact, if you compile and run sample_dshow_player, it will actually use the DirectShow filters to not only load and play the elementary H.264 you’ve generated, but also transcode it to an AVI/MP4 file. Which is very nice, but still non-optimal. In the future, I’d like to check out the DirectShow route so I can automatically generate AVI files. Furthermore, it is possible even to mux in audio, which would be nice so I can record audio of the system instead of just video.

The End Result

So there we have it: video now comes in from the cameras, is processed by my vision system, sent to Quick Sync for encoding, and saved to disk. Encoding happens faster than I can shove frames in and barely increases my CPU load (there are some memcpy operations and various API calls to manage Quick Sync and save the resulting stream to a file, but these are all relatively lightweight). I get an elementary H.264 stream out, which I will then batch convert using a modified version of the sample_dshow_player. It’s not completely optimal yet (I would prefer to output AVI/MP4 files), but for 3 days’ worth of futzing around with the Intel Media SDK, I think what I have works quite well for my needs.

Again it is 3 am. Must be the perfect time to update my website. Or you know, actually sleep like a normal person. In any case, I just got back from the IROS robotics conference in San Fran, which was cool (both literally and figuratively). I got there a couple days early to see friends who had graduated and were now working in the Bay Area. It was awesome to hang out and do stuff. Our “walking around SF” day turned into an 11 mile hike through the city, along the piers, through Fisherman’s Wharf, to Ghirardelli Square, across the lawns, up to the Golden Gate Bridge, across the bridge, and then back. Whew! Very exhausting.

IROS itself was both fun and boring in about equal measures. Maybe more on the boring side 😉 My presentations went well and CMU had two papers that were up for awards, which is always awesome. I saw some cool robots there, talked to people, and generally had a nice time. My iPod Touch came in handy when I ran into a talk that bored me; I’d just start reading my ebook until they said something interesting 😉

The weekend I got back was the PhD Movie (you know, from the makers of the PhD Comics), which honestly I was expecting to be a bad B movie with relatively weak jokes. However, it really surprised me. While definitely a B movie with relatively stiff acting, the laughs were really quite good. At least from my perspective as a grad student, they were spot on. “And the part of the week you look forward to most is the Friday gathering where you stand around drinking and talking to the same people you’ve been talking to all week” 🙂 It’s so true. Anyhow, I recommend a watch if you are (or know somebody) in grad school. I doubt it will become as iconic as the red stapler movie whose name currently eludes me, but it should be. And with that witty sentence, I shall take my leave to bed. Adieu!

Well here I am waiting at 3 am for a run to finish. So I figured I could write a post on my blog since it has been a while since I’ve updated this site. I could go to bed, but then I’d be wasting 6 hours of CPU time while I sleep. Methinks perhaps my priorities might not be too good. Oh the life of a grad student. What other mundane trivia can I add to the eye glazing, mind numbing tediousness that is the Internet? Let’s see, I bought a $17 spinach calzone the other day. The thing is huge; it’s gotta be a large pizza crust that they just rolled over to make into a calzone. It was pretty tasty and so far I’ve gotten 2 meals off of it with hopefully a third to come.

Is my run done? Bah, still 300 MB left to process….sigh…Oh I sprained my ankle playing racquetball, that was quite the ordeal. I went to CMU health services who gave me crutches and sent me to the hospital to get it x-rayed. Luckily it wasn’t broken, but in the day I spent using crutches I gained a lot more appreciation for people who have to use them. Dude those things are so much harder to use than they look. With the whole right foot being rather incapacitated, I’ve been driving left-footed. It takes a bit of getting used to for the first couple of days but then it’s almost natural. A couple times I had to think which foot I was using.

Ahaha, my run is done, starting a new one and off to bed!

Four years of grinding work in graduate school, done with classes, put out some conference papers, published a journal paper, and people keep asking when I’m going to be done. It must be that time in the PhD program to propose a thesis. Next Monday I’m giving my oral proposal, but I just mailed the thesis document to my thesis committee members and the Robotics Institute in general. The details are:

Vision-Based Control of a Handheld Micromanipulator for Robot-Assisted Retinal Surgery

Abstract – Surgeons increasingly need to perform complex operations on extremely small anatomy. Many promising new surgeries are effective, but difficult or impossible to perform because humans lack the extraordinary control required at sub-mm scales. Using micromanipulators, surgeons gain better positioning accuracy and additional dexterity as the instrument smoothes tremor and scales hand motions. While these aids are advantageous, they do not actively consider the goals or intentions of the operator and thus cannot provide context-specific behaviors, such as motion scaling around anatomical targets, prevention of unwanted contact with pre-defined tissue areas, and other helpful task-dependent actions.

This thesis explores the fusion of visual information with micromanipulator control and builds a framework of task-specific behaviors that respond synergistically with the surgeon’s intentions and motions throughout surgical procedures. By exploiting real-time microscope view observations, a-priori knowledge of surgical procedures, and pre-operative data used by the surgeon while preparing for the surgery, we hypothesize that the micromanipulator can better understand the goals of a given procedure and deploy individualized aids in addition to tremor suppression to further help the surgeon. Specifically, we propose a vision-based control framework of modular virtual fixtures for handheld micromanipulator robots. Virtual fixtures include constraints such as “maintain tip position”, “avoid these areas”, “follow a trajectory”, and “keep an orientation” whose parameters are derived from visual information, either pre-operatively or in real-time, and are enforced by the control system. Combining individual modules allows for complex task-specific behaviors that monitor the surgeon’s actions relative to the anatomy and react appropriately to cooperatively accomplish the surgical procedure.

Particular focus is given to vitreoretinal surgery as a testbed for vision-based control because several new and promising surgical techniques in the eye depend on fine manipulations of delicate retinal structures. Preliminary experiments with Micron, the micromanipulator developed in our lab, demonstrate that vision-based control can improve accuracy and increase usability for difficult retinal operations, such as laser photocoagulation and vessel cannulation. An initial framework for virtual fixtures has been developed and shown to significantly reduce error in synthetic tests if the structure of the surgeon’s motions is known. Proposed work includes formalizing the virtual fixtures framework, incorporating elements from model predictive control, improving 3D vision imaging of retinal structures, and conducting experiments with an experienced retinal surgeon. Results from experiments with ex vivo and in vivo tissue for selected retinal surgical procedures will validate our approach.

Thesis Committee Members:

Cameron N. Riviere, Chair

George A. Kantor

George D. Stetten

Gregory D. Hager, Johns Hopkins University

A copy of the thesis proposal document is available at:

http://briancbecker.com/thesis/becker_proposal.pdf

Because my robot’s control system runs on a LabVIEW real-time machine, I have no recourse but to add new features in LabVIEW. Oh, I tried coding new stuff in C++ on another computer and streaming information via UDP over gigabit, but alas, additional latencies of just a few milliseconds are enough to make significant differences in performance when your control loop runs at 2 kHz (one cycle every 0.5 ms, so a few milliseconds of delay spans several cycles). So I must code in LabVIEW as an inheritor of legacy code.

With a computer engineering background, I find that having to develop in LabVIEW fills me with dread. While I am sure LabVIEW is appropriate for something, I have yet to find it. Why is it so detestable? I thought you’d never ask.

- Wires! Everywhere! The paradigm of using wires instead of variables makes some sort of sense, except that for anything reasonably complex, you spend more time trying to arrange wires than you do actually coding. Worse, finding how data flows by tracing wires is tedious. Especially since you can’t click and highlight a wire to see where it goes – clicking only highlights the current line segment of the wire. And since most wires aren’t completely straight, you have to click through each line segment to trace a wire to the end. [edit: A commenter pointed out double clicking a wire highlights the entire wire, which helps with the tracing problem]

- Spatial Dependencies. In normal code, it doesn’t matter how far away your variables are. In fact, in C you must declare locals at the top of functions. In LabVIEW, you need to think ahead so that your data flows don’t look like a rat’s nest. Suddenly you need a variable from half a screen away? Sure you can wire it, but then that happens a few more times and BAM! suddenly your code is a mess of spaghetti.

- Verbosity of Mathematical Expressions. You thought low-level BLAS commands were annoying? Try LabVIEW. Matrices are a nightmare. Creating them, replacing elements, accessing elements, any sort of mathematical expression takes forever. One-liners in a reasonable language like MATLAB become a whole 800×800 pixel mess of blocks and wires.

- Inflexible Real-Estate. In normal text-based code, if you need to add another condition or another calculation or two somewhere, what do you do? That’s right, hit ENTER a few times and add your lines of code. In LabVIEW, if you need to add another calculation, you have to start hunting around for space to add it. If you don’t have space near the calculation, you can add it somewhere else but then suddenly you have wires going halfway across the screen and back. So you need to program like it’s the old-school days of BASIC where you label your lines 10, 20, 30 so you have space to go back and add 11 if you need another calculation. Can’t we all agree we left those days behind for a reason? [edit: A commenter has mentioned that holding Ctrl while drawing a box clears space]

- Unmanageable Scoping Blocks. You want to take something out of an if statement? That’s easy, just cut & paste. Oh wait no, if you do that, then all your wires disappear. I hope you remembered what they were all connected to. Now I’m not saying LabVIEW and the wire paradigm could actually handle this use case, but compare this to cut & paste of 3 lines of code from inside an if statement to outside. 3 seconds, if that compared to minutes of re-wiring.

- Unbearably Slow. Why is it when I bring up the search menu for Functions that LabVIEW 2010 will freeze for 5 seconds, then randomly shuffle around the windows, making me go back and hunt for the search box so I can search? I expect better on a quadcore machine with 8 gb of RAM. Likewise, compiles to the real-time target are 1-5 minute long operations. You say, “But C++ can take even longer” and this is true. However, C++ doesn’t make compiles blocking, so I can modify code or document code while it compiles. In LabVIEW, you get to sit there and stare at a modal progress bar.

- Breaks ALT-TAB. Unlike any other normal application, if you ALT-TAB to any window in LabVIEW, LabVIEW completely re-orders the Windows Z-order so that you can’t ALT-TAB back to the application you were just running. Instead, LabVIEW helpfully pushes all other LabVIEW windows to the foreground so if you have 5 subVIs open, you have to ALT-TAB 6 times just to get back to the other application you were at. This of course means that if you click on one LabVIEW window, LabVIEW will kindly bring all the other open LabVIEW windows to the foreground, even those on other monitors. This makes it a ponderous journey to swap between LabVIEW and any other open program because suddenly all 20 of your LabVIEW windows spring to life every time you click on one.

- Limited Undo. Visual Studio has nearly unlimited undo. In fact, I once was able to undo nearly 30 hours of work to see how the code evolved during a weekend. LabVIEW, on the other hand, has incredibly poor undo handling. If a subVI runs at a high enough frequency, just displaying the front-panel is enough to cause misses in the real-time target. Why? I have no idea. Display should be much lower priority than something I set to ultra-high realtime priority, but alas LabVIEW will just totally slow down at mundane things like GUI updates. Thus, in order to test changes, subVIs that update at high frequencies must be closed prior to running any modifications. Of course, this erases the undo. So if you add in a modification, close the subVI, run it, discover it isn’t a good modification, you have to go back and remove it by hand. Or if you broke something, you have to go back and trace your modifications by hand.

- A Million Windows. Please, please, please for the love of my poor taskbar, can we not have each subVI open up two windows for the front/back panel? With 10 subVIs open, I can see maybe the first letter or two of each subVI. And I have no idea which one is the front panel and which is the back panel except by trial and error. The age of tabs was born, oh I don’t know, like 5-10 years ago? Can we get some tab love please?

- Local Variables. Sure you can create local variables inside a subVI, but these are horribly inefficient (copy by value) and the official documentation suggests you consider shift registers, which are variables associated with loops. So basically the suggested usage for local variables is to create a for loop that runs once, and then add shift registers to it. Really LabVIEW, really? That’s your advanced state-of-the-art programming?

- Copy & Paste. So you have an N x M matrix constant and want to import or export data. Unfortunately, copy and paste only works with single cells so have fun copying and pasting N*M individual numbers. Luckily if you want to export a matrix, you can copy the whole thing. So you copy the matrix, and go over to Excel and paste it in and……….suddenly you’ve got an image of the matrix. Tell me again how useful that is? Do you magically expect Excel to run OCR on your image of the matrix? Or how about this scenario: you’ve got a wire probed and it has 100+ elements. You’d like to get that data into MATLAB somehow to verify or visualize it. So you right click and do “Copy Data” and go back to MATLAB to paste it in. But there isn’t anything to paste! After 10 minutes of Googling and trial and error, it turns out that you have to right click and “Copy Data”, then open up a new VI, paste in the data, which shows up as a control, which you can then right-click and select “Export -> Export Data to Clipboard”. Seriously?!? And it doesn’t even work for complex representations, only the real part is copied! I think nearly every other program figured out how to copy and paste data in a reasonable manner, oh say, 15 years ago?

- Counter-Intuitive Parameters. Let’s say you want to modify the parameters to a subVI, i.e. add a new parameter. Easy right? Just go to the back panel with the code and tell it which variables you want passed in. Nope! You have to go to the front panel, right-click on the generic looking icon in the top right hand corner, and select Show Connector. Then you select one of those 6×6 pixel boxes (if you can click on one) and then the variable you want as a parameter. LabVIEW doesn’t exactly go out of its way to make common usage tasks easy to find.

Now granted, there are some nice things about LabVIEW. Automatic garbage collection [or rather the implicit instantiation and memory managing of the dataflow format, as one commenter pointed out], easy GUI elements for changing parameters and getting displays, and…………well I’m sure there are a few other things. But my point is, I am now reasonably proficient in LabVIEW basics and still have no idea how people manage to get things coded in LabVIEW without wanting to tear their hair out. There are people who love LabVIEW, and I wish I knew why, because then maybe I wouldn’t feel such horrific frustration at having to develop in LabVIEW. I refuse to put it on my resume and will avoid any job that requires it. Coding in assembly language is more fun.

Thanks to Joydeep and Pras, Plinth now actually works under Mac & Linux (both x86 and x64). It turns out it was just some minor library and compilation issues that needed to be resolved. This means that the helicopter visualizer now works on all three major platforms. Luckily, the MATLAB interface is cross-platform by using Java sockets. So go get some helicopter goodness.

Well I got a cold with the stuffed up nose, sore throat, and whole enchilada. It’s like it’s winter or something. Oh wait! It is winter. What was my first clue? Maybe the fact it is 7 F outside and there is this blindingly white stuff everywhere. On the plus side, this has made it much easier to prevent my phone from overheating. And yes, when acting as a 3G wifi hotspot and charging the battery, my phone will overheat and do an odd LED blinky dance of death, refusing the charge and generally acting really really slowly. That’s when I put it outside between the window and the screen in the nice Pittsburgh winter weather for a while to cool off. It works really well actually.

Anyhow, this has messed with my sleep schedule massively. I was in bed by 11 pm last night, but after an hour of not being able to sleep, I cracked open my laptop and figured I’d do work until sleep overwhelmed me. Alas that didn’t happen until 6 am so now things are all wonky. Maybe I’ll just invert my schedule. If I wake up at 4 pm, I can just drive my car to campus instead of having to take the bus. How handy would that be? Of course, finding food in the middle of the night in Pittsburgh can be a bit tough.

I have to review a couple of papers in the robotics field and was asking myself today: “What makes a good reviewer?” Let’s see. Ultimately, a reviewer serves two purposes: a judge and confidant. As a judge, a reviewer should objectively look at a body of research documented in a paper and check a number of important criteria:

- Does the research fit with the topic and tone of the place it is being published? Many research papers are good, but would be more appropriate if submitted to a different venue.

- Does the research meet the quality standards of the place it is being published? Is the research thorough and are the results representative of what you would expect?

- Does the paper present the research well? Does it clearly document the steps taken such that the results can be duplicated?

Of course, you could come up with many additional criteria by which to judge papers, and that is all well and good.

However, it is the second aspect to reviewing papers that I feel many people miss out on. A reviewer should be more than just an imperious judge. In my opinion, what makes your ye olde standard reviewer into a good reviewer is the ability to act as a confidant. A confidant is somebody who you can share something important with and expect frank, but kindly advice. Your father, sibling, close friend. Somebody who will listen to you without condescension, frustration, or the like and really wish the best while advising you. Similarly, a researcher submitting their work for review is sharing their not-yet published research with you. Just as a confidant is somebody who is outside the situation and can offer advice, a reviewer should offer candid, yet kindly suggestions on how to make the research better. I have seen some reviewers who view their job as judge, jury, and executioner in one, shredding good work and nitpicking small ideological issues. This is not to say that a reviewer shouldn’t be completely candid – sometimes the job of a confidant is to deliver unpleasant truth. They can note missing references, bring attention to errors, point out inconsistencies, indicate parts of the paper are unclear, make editorial corrections, etc. However, they should also offer insights that the authors might benefit from, suggest new avenues of future research, list more appropriate venues to publish, detail improvements that could be made, etc. And often it is not what you say, but how you say it.

The line between acting as judge and acting as confidant is sometimes hard to find and to walk, but for the poor researcher slaving away at difficult problems for months and years on end, it is the least you can do. Put more than a quick scan and a few sentences into your reviews, and aim to be a good reviewer.

After making prison loaf (i.e. a nutritionally complete bread similar to meatloaf but made out of vegetables for prison inmates that really quite honestly tastes like nothing), we decided that perhaps a better idea was to combine lots of different delicious desserty things. Somehow this morphed into dessert burritos. Yeah don’t look at me – I don’t know from behind which bush that idea sprang and tackled us, but it seemed like a pretty good idea at the time. Maybe it was the horrid taste of nothingness left behind from the insipid prison loaf that persists for months regardless of how often you try to scrub the memory from your mind. Or maybe not. Anyhow, thus began the trip to buy goodies and make brownies and prepare for something possibly great. Recipes for dessert burritos were a bit hard to come by (OK, I lie – I didn’t even check) so I just decided to wing it. Here is the recipe to our culinary masterpiece:

1) Start with one soft taco burrito thingie from the store.

2) Place one brownie square in the middle of the burrito.

3) Spread Nutella on the burrito. If you can somehow make it not look like a mud pie, do so.

4) Scoop one vanilla and one chocolate ice cream ball onto the burrito.

5) Liberally sprinkle strawberries and blueberries or other fantastic fruit on your burrito.

6) No dessert is complete without drizzled chocolate syrup, so go hog wild.

7) Fold your burrito, making sure to fold the bottom in so it doesn’t leak too too much. Notice my ultra-classy paper plate.

8) Eat your dessert burrito! Please don’t look as scared as I am at the prospect.

So yeah there you have it. Dessert burritos. I’ve got to admit, the burrito was a bit saltier than I was expecting, so if you can procure non-salted ones, it would be in your best interest. I think I might just recommend a regular old sundae unless you are looking for something particularly unique.

Microsoft, always the butt of nerd jokes with Windows and blue screens, has introduced a new device that will revolutionize robotics: the Kinect. It is a depth-camera using structured light that gives full 3D representations of indoor environments within 2 to 10 feet along with a normal camera view. Originally designed to work with the Xbox, people quickly hacked it so you could feed the sensor information into a standard PC. Being in the Robotics Institute at CMU, my friends and I made a trip to Target a week after it was released and bought all the Kinects they had to do cool robotics activities. Apparently we weren’t the only people to have such grand ideas as already there are plenty of people doing really cool things with the Kinect.

My friends and I are currently working on automatic 3D world-building and localization for robots. If you slap a Kinect on a robot, can you use the depth + camera to figure out where you are, construct a 3D world, and colorize it with the cameras? The answer is of course yes, but it remains an open question how to best go about this. My guess is there will be a bunch of papers on this at the next robotics conferences such as ICRA or IROS. Personally, I think it would be really cool to be able to strap a Kinect on your head, run around indoors, build a map of your workplace, and then import it into Quake or another game so you can run around killing people in your own custom map.

However, my real official research is with vision based control of a micromanipulator for surgery. So I do a lot of work with 3D reconstruction from stereo surgical microscopes. It would be super amazing if the Kinect could be attached to the microscope and used to build a full 3D representation of what the surgeon sees. On the OpenKinect driver forums, there were some musings that it might be possible, so I decided to investigate.

The first thing I tried was using the Kinect under a lab bench magnifying glass, and it worked quite well. Interestingly enough, increased magnification made things look farther away, so you could get objects closer to the Kinect and get 3D observations. This makes sense if you think about a magnifying glass as narrowing the spread of the IR projection. Another interesting point is that you do need both the IR projector and IR camera using the same magnification. This means you would need a stereo microscope. Luckily, our microscope is a surgical grade one with stereo. As a crude test, I positioned the IR projector and IR camera across the eyepieces of our Zeiss OPMI 1 microscope to see if I could get depth by looking down the microscope. Unfortunately, nothing showed up on the depth image so something wasn’t right.

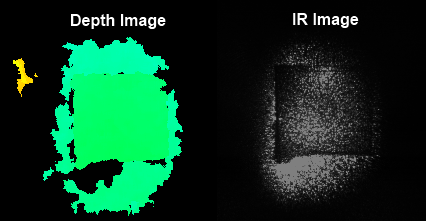

Using the night-vision mode on a Sony HandyCam, I was able to verify that the IR projection was showing up as a bright blurry dot on the target. My guess was that the focus was wrong, so I pulled up the ROS version of the libfreenect library where they had added the ability to get the raw IR camera data. Using the video feed from the IR camera, I was hoping I would be able to focus the dot pattern and get some depth information. Unfortunately, libfreenect on Linux had some issues where it detected bloom and reset the IR projection, which was super annoying. So after some hours of fighting with Linux vs. Windows USB driver code, I was able to port the ROS changes to the OpenKinect Windows drivers so I could view the depth map and IR camera in realtime.

Again, just by positioning the Kinect on the eyepieces of the microscope, I was able to get some interesting results. First, the positioning is very crude and resulted in poor alignment of the IR projection. However, at low magnifications, I was able to get some reconstruction of my rubber square (about 1″ x 1″ x 0.1″) glued to a floppy disk. Unfortunately, higher magnifications become increasingly problematic because as the magnification increases, the perceived depth also increases – beyond the range of the Kinect. At 25X magnification, the depth was splotched dark blue and black, which are the colors reserved for the furthest away depths and for too far away (or no match). Another problem is illumination as specular reflections become very pronounced under a microscope. Anybody who has worked with wet or metallic items under high magnification and strong illumination can tell you that a specular reflection can completely swamp the scope. As you increase magnification, this becomes quite problematic. Because of these two problems, I was unable to get any good depth information from the highest magnification.

So what can we draw from this? For low magnifications, it seems possible to use the Kinect under a stereo microscope, although not great due to crude positioning. Higher magnifications are more problematic because of specular reflections blooming out the IR camera and the fact that higher magnification increases the perceived depth. Hopefully there are some ways around this. Perhaps some fancy positioning and/or filters might be able to reduce the specular reflections – however, I feel that would be very tricky. The depth problem is more difficult; in this case, it seems that perhaps hardware changes may be required or fancier optics. Hopefully somebody cleverer than myself is working on the problem and will come up with an awesome solution.

Sparse encoding, i.e. finding solutions of the form Ax=b with x subject to an l1 norm, is a popular approach in signal reconstruction and now face recognition. Unlike the least-squares l2 norm which can be solved rapidly with SVD, finding the sparse l1 solution is a rather CPU expensive task. One of the fastest l1 solvers, GPSR, still takes several seconds per image to process. If you profile the code, it turns out that 95% of the time is spent in matrix multiplication, which got me to thinking “there must be more efficient ways to do this.” I came up with three ways to optimize GPSR:

1) Single precision floating point math

Matlab by default uses double precision floating point math, so one quick and dirty solution is to convert everything to singles before calling GPSR. This gives a speed up of roughly 50%, which is fairly effective with a very negligible drop in accuracy (<0.5%) because of the lower precision.
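To get a feel for how little precision is lost, here is a minimal C++ sketch (not the MATLAB code from this post) that compares a dot product accumulated in `float` against the same one in `double`. The helper names `dot` and `single_vs_double_error` and the test data are made up for illustration; the actual accuracy drop inside GPSR depends on the data and the solver.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Dot product with the accumulator and arithmetic in type T (float or double).
template <typename T>
T dot(const std::vector<T>& x, const std::vector<T>& y) {
    T sum = T(0);
    for (std::size_t i = 0; i < x.size(); ++i) sum += x[i] * y[i];
    return sum;
}

// Relative difference between a float and a double dot product of the same
// data. The data (1, 1/2, 1/3, ...) is arbitrary and only for illustration.
// n must be at least 1.
inline double single_vs_double_error(std::size_t n) {
    std::vector<double> xd(n);
    std::vector<float> xf(n);
    for (std::size_t i = 0; i < n; ++i) {
        xd[i] = 1.0 / static_cast<double>(i + 1);
        xf[i] = static_cast<float>(xd[i]);
    }
    const double exact = dot(xd, xd);
    const double approx = static_cast<double>(dot(xf, xf));
    return std::fabs(approx - exact) / exact;
}
```

Calling `single_vs_double_error(100)` mimics one length-100 inner product like the ones in the PCA-reduced face vectors. The error it reports is tiny compared to the roughly 2X difference in memory traffic between `float` and `double` on typical platforms.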

2) Batch processing with matrices

The way I use GPSR is to find the sparse representation of one test face from a set of training faces. It is not unusual to have tens of thousands of training images, each with a size of 100-300 after PCA dimensionality reduction. Say we have 25,000 training images each with dimensionality of 100. Using GPSR, the bottleneck in finding the sparse representation of a test image involves multiplying a 25,000×100 matrix by a 100 vector many times until some convergence (I am of course leaving out some other things that happen for simplicity’s sake). By matrix multiplication properties, we can process multiple test images in batch by concatenating their vectors into a 100xN matrix. Although accomplishing the same thing, this single matrix multiply has the advantage of a single BLAS call where things can remain in L1 and L2 cache longer, giving us a much higher speedup. The problem here is some test images converge faster than others, but we can get around this by dynamically inserting and removing test image vectors into our concatenated “test images” matrix as needed. This yields about a 5X improvement with a batch size of 256, bringing us to about a 10X improvement over the original GPSR – for this particular application, of course.
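The batching idea rests on the fact that column j of A·B equals A times column j of B. Here is a small C++ sketch of that property. The `Matrix` struct, `multiply`, and `concat_columns` are hypothetical helpers written for this example; a real implementation would hand the concatenated matrix to an optimized BLAS GEMM call instead of the naive triple loop shown here.

```cpp
#include <cstddef>
#include <vector>

// Row-major dense matrix; a stand-in for a MATLAB array or a BLAS buffer.
struct Matrix {
    std::size_t rows;
    std::size_t cols;
    std::vector<float> data;  // rows * cols entries

    Matrix(std::size_t r, std::size_t c) : rows(r), cols(c), data(r * c, 0.0f) {}
    float& at(std::size_t r, std::size_t c) { return data[r * cols + c]; }
    float at(std::size_t r, std::size_t c) const { return data[r * cols + c]; }
};

// Naive C = A * B, assuming a.cols == b.rows.
inline Matrix multiply(const Matrix& a, const Matrix& b) {
    Matrix c(a.rows, b.cols);
    for (std::size_t i = 0; i < a.rows; ++i)
        for (std::size_t k = 0; k < a.cols; ++k)
            for (std::size_t j = 0; j < b.cols; ++j)
                c.at(i, j) += a.at(i, k) * b.at(k, j);
    return c;
}

// Packs N test vectors of length d (all the same length) into one d x N
// matrix, one test image per column.
inline Matrix concat_columns(const std::vector<std::vector<float>>& vecs) {
    const std::size_t d = vecs.empty() ? 0 : vecs[0].size();
    Matrix m(d, vecs.size());
    for (std::size_t j = 0; j < vecs.size(); ++j)
        for (std::size_t i = 0; i < d; ++i)
            m.at(i, j) = vecs[j][i];
    return m;
}
```

With A as the 25,000×100 training matrix, `multiply(A, concat_columns(tests))` produces in one call the same columns you would get from N separate matrix-vector products. Swapping a converged test image for a fresh one then amounts to overwriting its column.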

3) GPU acceleration

A final step to optimizing the GPSR algorithm is to move to specialized hardware, such as graphics cards via a CUDA interface. Luckily, nVidia provides a CUDA implementation of BLAS called cuBLAS. How much of a speedup would we be likely to attain using this? Since matrix multiplication is still roughly 50% of my optimized GPSR algorithm, I took a look at matrix multiplication on various architectures.

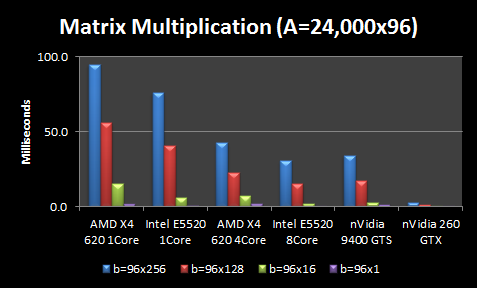

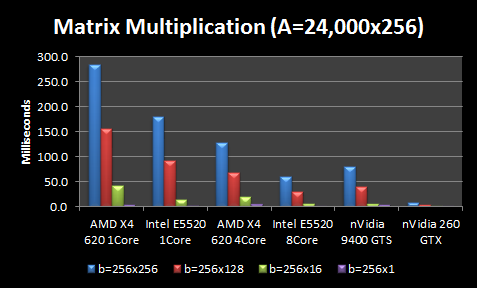

I conducted two test configurations with some simplifying assumptions. First, I assumed all matrix sizes are multiples of 16 because cuBLAS seems to prefer this memory configuration. So I assume our training matrix A is 24,000×96 and test images are 96×1. I consider both without batching and with batches of test images with various sizes: 16, 128, and 256. Thus, I vary the test images matrix b over sizes ranging from 96×1 to 96×256.

All tests are averaged over 10-100 matrix multiplications with single precision matrices. I tested 4 CPU configurations and 2 GPU configurations:

- AMD X4 620 1Core ($100): Low-end 2.6 GHz Athlon quadcore system without L3 cache with only 1 core used

- AMD X4 620 4Core ($100): Low-end 2.6 GHz Athlon quadcore system without L3 cache with all 4 cores used

- Intel E5520 1 Core ($375): High-end 2.26 GHz Xeon quadcore system with only 1 core used

- Intel E5520 8 Core ($750): Two high-end 2.26 GHz Xeon quadcores with all 8 cores used

- nVidia 9400 GT ($40): Low-end GPU with only 16 CUDA cores

- nVidia 260 GTX ($200): Middle-end GPU with 216 CUDA cores

NOTE: CPU configurations used MATLAB, which in turn usually uses CPU-optimized BLAS libraries like MKL on Intel or ACML on AMD. For a quick comparison, I benchmarked the fast GotoBLAS against Matlab with the MKL and found GotoBLAS only 2% faster. Since this performance increase is fairly negligible, I didn’t pursue testing different BLAS packages.

NOTE: Memory transfer is not included in these comparisons because I can leave the large A matrix on the GPU unmodified as I process thousands of test images. Thus my problem is floating-point bound rather than memory bound.

I also consider the same scenario except the image size is 256 instead of 96 (so more PCA dimensions).

The CPU results are not terribly surprising. More expensive CPUs outperform less expensive CPUs, but the performance gains for more expensive CPUs are not proportional to the extra cost (i.e. 4X increase in CPU cost doesn’t give you anywhere close to a 4X performance boost). Throwing more cores at the problem helps, but again the scaling is nowhere near perfect. An extra 4 cores gives you ~2X boost and 8 cores gives you ~3X boost in performance. Larger matrices see better scaling. The interesting part is the GPUs. A $40 nVidia GPU is better than the $100 AMD CPU using all 4 cores, and performs about equivalently if you factor in the memory transfer to the GPU.

In fact, the $40 GPU is only beat by my $750 dual Intel CPUs with 8 cores, which is kind of astonishing. And the middle-end $200 nVidia GPU outperforms everything by a wide margin, averaging 5-30X better. However, at these high processing speeds, data transfer becomes the overhead. Thus, the Intel 8 Core still beats the nVidia 260 GTX if we factor in memory transfer of the large A matrix for every multiplication. However, I only have to transfer the large A matrix of ~25,000 training images to the GPU once. Thus for my application, it could still be tremendously faster to use the GPU.

Although it doesn’t show well on this graph, using matrix multiplication for b=96×1 is actually 1.5X slower than b=96×16 , leading me to believe that GPUs do have some interesting side-effects when it comes to matrix sizes, block optimizations, etc. It is good to know these intricacies because you could get significant speedups just by zero-padding matrices so the dimensions are divisible by 16.
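Zero-padding is easy to do by hand. The sketch below is C++ with hypothetical helper names `round_up` and `zero_pad`, and it uses row-major storage for readability (cuBLAS itself expects column-major data). It rounds both dimensions up to the next multiple of 16 and copies the original entries into a zero-filled buffer. The extra rows and columns are all zeros, so they add nothing to the product and the padded part of the result can simply be ignored.

```cpp
#include <cstddef>
#include <vector>

// Smallest multiple of `multiple` that is >= n (multiple must be nonzero).
inline std::size_t round_up(std::size_t n, std::size_t multiple) {
    return ((n + multiple - 1) / multiple) * multiple;
}

// Copies a row-major rows x cols matrix into a zero-filled buffer whose
// dimensions are both rounded up to a multiple of `multiple`.
inline std::vector<float> zero_pad(const std::vector<float>& src,
                                   std::size_t rows, std::size_t cols,
                                   std::size_t multiple) {
    const std::size_t prows = round_up(rows, multiple);
    const std::size_t pcols = round_up(cols, multiple);
    std::vector<float> dst(prows * pcols, 0.0f);
    for (std::size_t r = 0; r < rows; ++r)
        for (std::size_t c = 0; c < cols; ++c)
            dst[r * pcols + c] = src[r * cols + c];
    return dst;
}
```

For example, `round_up(25000, 16)` gives 25008 and `round_up(100, 16)` gives 112, so a 25,000×100 training matrix would be stored as 25,008×112.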

I haven’t actually coded up the GPSR algorithm in CUDA simply because my existing 10X speedups seem to be working pretty well so far. And if I run GPSR on multiple datasets simultaneously, one on each core, I should be able to get much better parallelism than distributing each matrix multiplication over many cores. However, it is interesting to know that a $40 graphics card and some CUDA programming could outperform a brand new quad-core machine.

I hate them. No seriously. They are terrible. In fact, I’ll go further and say that dealing with video programmatically through C++ has plagued me for almost a decade now. The scenario is this: I’ve recorded some video from one of my experiments and now I want to post-process it and overlay some visualizations on it, so I need a program to read it in. I was using OpenCV with Visual C++ 2008, which includes ffmpeg, but it crapped out on me with a nice error message:

Compiler did not align stack variables. Libavcodec has been miscompiled and may be very slow or crash. This is not a bug in libavcodec, but in the compiler. You may try recompiling using gcc >= 4.2. Do not report crashes to FFmpeg developers.

Yeah nice. Plus, OpenCV’s video support via ffmpeg doesn’t support 64-bit programs. I messed around with recompiling with different options and doing some quick web-searching, but didn’t find any easy solutions. So what to do? Well I could go directly to Microsoft’s solution of DirectShow via DirectX but that requires an insane amount of setup and often times the DirectX SDK doesn’t even compile cleanly with the latest versions of Visual Studio. I remembered I had solved this problem years ago in my time at Robotics Lab @ UCF by compiling all the DirectX stuff into a DLL so you could just include the header file and go. So I dug that up and compiled it just fine, but then it crashed because it was compiled with Visual C++ 2005 runtime DLLs. And of course you can’t mix & match. I wasn’t about to recompile from source because that meant setting up DirectX SDK.

So I had heard good things about ffmpeg and/or gstreamer as being generic video libraries with lots and lots of codecs available. But unfortunately, ffmpeg developers are a bit sloppy about Visual C++ support (with good reason since it’s not standards compliant), so it’s a super pain. So after a long arduous journey of like 5 hours, I finally arrived at using gstreamer’s Windows SDK to compile an ffmpeg sample that loaded a video and wrote out the first 5 frames to disk as ppms.

I’m thinking of creating a generic video server over sockets or shared memory so I never have to compile in the video reading/writing stuff ever again (at least until the binaries break). I don’t know, is there a good Windows Video Library that I’m missing?

I’m sure unsigned int has had its place in the eons gone by; however, I think it’s outlived its useful lifespan. The number of times I’ve tried to do math with it and shot myself in the foot is quite astonishing. The root of the problem is you simply can’t do subtraction and expect sane results because it will underflow. Let’s take a look at an innocuous piece of code.

// Process all but the last element in the queue

for (int i = 0; i < queue.size()-1; i++)

All we want to do here is process all the items in a queue except the last one. And since we’ve declared our counter as int, everything should be fine, right? If there is nothing in the array, queue.size()-1 will be 0-1 which evaluates to -1 and will not satisfy the loop condition.

However, this crashes. Why? Because I discovered queue.size() returns an unsigned int. And of course with unsigned int, only non-negative numbers are represented so when you do 0-1, the result will not be -1 but 4294967295. Brilliant, now my loop will execute 4 billion times when the queue is empty. Ahhh! Why would people code like this? If you need more than 2 billion items (the max size of a normal int), and use unsigned int instead, chances are the max size of 4 billion won’t be sufficient anyhow.
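One way to sidestep the problem without giving up the unsigned size is to move the subtraction to the other side of the comparison, so nothing can ever drop below zero. Here is a minimal C++ sketch. It assumes `queue` is a `std::vector<int>` (the original container type isn't shown), and `process_all_but_last` and `zero_minus_one_wraps` are names made up for this example.

```cpp
#include <cstddef>
#include <limits>
#include <vector>

// Unsigned arithmetic wraps modulo 2^N, so 0u - 1u is the largest value.
inline bool zero_minus_one_wraps() {
    unsigned int zero = 0;
    return zero - 1u == std::numeric_limits<unsigned int>::max();
}

// Visits every element except the last and returns how many were visited.
// Writing the bound as i + 1 < size() avoids subtracting from an unsigned size.
inline std::size_t process_all_but_last(const std::vector<int>& queue) {
    std::size_t visited = 0;
    for (std::size_t i = 0; i + 1 < queue.size(); ++i) {
        ++visited;  // stand-in for the real per-item work
    }
    return visited;
}
```

With an empty queue the condition is `0 + 1 < 0`, which is false right away, so the loop body never runs. An alternative is to cast the size to a signed type before subtracting, which keeps the original loop shape.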

In conclusion, if I had to re-design C or C++, I would totally leave out unsigned int. Or at least re-define the underflow/overflow behavior so that 0-1 in unsigned int land will trigger an underflow and automatically reset the result to 0. Disabling the underflow/overflow behavior by default would also prevent your treasury from becoming -32768 gold if you gain too much money in Civilization II.
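The "reset to 0" behavior is usually called saturating arithmetic, and it is easy to write by hand even though C and C++ don't do it by default. Below is a rough sketch with made-up function names. The 16-bit version assumes, purely for illustration, that a game stores its treasury in a signed 16-bit integer.

```cpp
#include <cstdint>
#include <limits>

// Unsigned subtraction that clamps at 0 instead of wrapping around.
inline unsigned int saturating_sub(unsigned int a, unsigned int b) {
    return a > b ? a - b : 0u;
}

// 16-bit signed addition that clamps at the type's limits instead of
// overflowing. The sum is computed in a wider int so it cannot overflow.
inline std::int16_t saturating_add(std::int16_t a, std::int16_t b) {
    const int sum = static_cast<int>(a) + static_cast<int>(b);
    const int lo = std::numeric_limits<std::int16_t>::min();
    const int hi = std::numeric_limits<std::int16_t>::max();
    return static_cast<std::int16_t>(sum < lo ? lo : (sum > hi ? hi : sum));
}
```

Here `saturating_sub(0u, 1u)` returns 0 rather than 4294967295, and `saturating_add(32767, 1)` stays at 32767 instead of flipping to -32768.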

Often, I join Internet sites to find out information about people I know or am going to know. The reason I joined Facebook was to find out more information about my new (and at the time, unmet) roommates. Thus it stands to reason that when a girl (let’s call her Laura, possibly because that’s her name) started showing interest, I looked her up on match.com. Of course, they make it difficult to find anything if you don’t set up at least a basic profile, so I figured why not? I’m single and while I don’t plan on getting romantically attached anytime soon (mostly because I live at school), I figured it wouldn’t hurt to keep my options open. I put up what I call a “Veridian Dynamics” profile (if you don’t know what that is, you must stop reading immediately and go watch the first episode of the show “Better Off Ted” because it’s amazing in a Dilbertesque type of way), which contains what I wish I could say if I didn’t have to worry about appearances. I threw up a few photos, including some joke ones where I was holding up an excised pig eye (hmm…now that I think about it, I’m not sure I want to meet anybody who thinks dead pig eyes are a turn on) and another one where I looked terrible because I had been up all night. You know, just to be completely honest, because I hear honesty is totally what online dating sites are all about – besides chicks totally dig hearing about a guy’s faults, right? No? Really? Oh bother, as Winnie the Pooh would say.

Anyhow, so I got to look at this girl’s profile which added to my creepiness factor (it doesn’t help that I professionally stalk people on Facebook). I left my profile up without any proofreading or anything as a free member and just a few days ago I got notification that I had an email from a GIRL! Gasp! Of course, my first mental image was of a sad, lonely, older tween on the heavier side desperately searching for a soulmate, which is sad. My second mental image was of two of my more prankster inclined friends hunched over a computer, filling out a fake account as a “girl” and then contacting me through the site to have some fun with me. I guess that shows how cynical I am because only my fourth or fifth mental image featured a normal girl. Anyhow I log on and see that I have to become a subscriber (i.e. pay money) to actually see the email.

Thus began the several day debate with myself whether to pay the money. 47 people had viewed my profile and if I know anything about statistics with online dating, it’s that a hit rate of 1 out of 47 people seems a little too good to be true. Reading online horror stories about Nigerian match.com scams and creepy old men didn’t make me feel much better either. But in the end I decided I’d spent money on worse things so I signed up for a few months. Right after signing up it took me to a special offers page with magazines such as Playboy, where I immediately performed an involuntary face-palm as a gut reaction to the “oh this is already so not panning out very well.” I also wanted to turn off auto-renew, which involved actually going all the way through the cancellation process, which is weird because it makes you think you are going to lose access when in reality it just cancels auto-renew.

OK, so time to read the email right? Nope! Being the geek that I am, I wanted to blog about the experience first! So that’s where I am now…now to Alt+Tab and see what I will find? Let me guess. Overweight desperate girl (40%) or prankster friends (30%) or people who recognize me on match.com (15%) or random dude who is inactive on the site (10%) or fake profile sent by employee to get me to pay (5%) or dream girl (<0.1%)? All part of the experience I suppose. Do I have a response for each one of these scenarios? Yup. Well actually not for the dream girl scenario. OK, now I’m just blogging to procrastinate actually reading the email. Bad Brian.

Alt + Tabbing….clicking 1 New Email….VIP Email? What nonsense is this! Just take me to the email already, I’ve just paid good money for what most likely will be nothing! Aww….19 years old? I’m leaning towards fake profile…clicking on profile…hmmm profile picture looking pretty young here, with the not quite classic, but (I imagine) common lying on my back in bed with arm thrown back pose. The grab-ya-attention blurb thingie (whatever it’s officially called) seems to align with my beliefs pretty well. That reduces the probability of a fake account at least. But it introduces a possibility I hadn’t thought of yet: the “much younger than me” girl. Usually people are complaining about the other way around. Ahh! Her age is 18? What happened to 19? I’m 24…what are girls thinking nowadays??? According to the US Centers for Disease Control and Prevention, I have a life expectancy of another ~51 years and she has a life expectancy of ~62 years. Me being 6 years older now on average translates to me being dead for 10+ years of her life. I suppose that may be over-analyzing it….as one of my Canadian friends tells me, those are the worst years of your life anyhow. So I tell myself to keep reading….and oh man she’s an “I just graduated high school” kid. I’m going into my 8th year of college (4th as a PhD) and she’s going into her first year of undergrad?

[An hour later] Well, that disturbed me enough that I had to go work off my anxiety, which resulted in some pacing around the apartment and trying to concentrate enough on playing some songs on my keyboard from my meager store of memory – it’s hard when my mind keeps doing math without my permission and telling me I’m a good 1/3 older than she is. Now that I’m completely traumatized and it’s 4 am, I’m going to go break open a box of mini-wheats, curl up in my bed, read a book and try to figure out how to respond to this girl, who seems quite nice otherwise. I suppose all in all, this is far from the worst I could have experienced and in fact I’m sure there are much worse things to come, but in the meantime, I will take refuge in geekiness and thoughts of creating a program that will crawl match.com and save a dataset that I can do interesting things with later. Maybe I’ll compile a list of the top 10 adjectives people use to describe themselves on dating sites.

Actually, the OkCupid founders, who are math majors, made a blog named OkTrends that analyzes all things dating and it is super amazing! For instance, they got half a million users to rate their self-confidence and then plotted it by state. They concluded with “Generally speaking, the colder it is, the more likely you are to hate yourself.” Swell! And I just lost another hour to reading all sorts of random statistics (men lie about their height by an average of 2 inches, really short men and really tall women are 3 times less likely to get messaged, you are more likely to get a reply if you begin your message with “howdy” instead of “hey”, and probably most surprising, men get the most messages if they are not smiling and not looking at the camera for their profile pictures). And now it’s 6 AM and I think it’s time to go to bed because it’s getting light outside. So there you have it: a night in the life of Brian.

Periodically somebody manages to graduate from the robotics institute and moves away, which is both a glad and sad time. This year it is Brina moving to Philly, which is close enough to da Pitts and an interesting enough place that we decided to road trip with her. So Friday night we loaded up her truck, slept for a few hours, and then went to Philly. On the way we stopped in Breezewood at the Bob Evans, where we saw a giant caterpillar. Brina was all like “oh my, you are the cutest thing I’ve EVAR seen!” and commented that it added to her dining experience.

So now we are ready to go for breakfast and move Brina’s whole life up three floors into her apartment, including her very heavy marimba instrument. Yay!

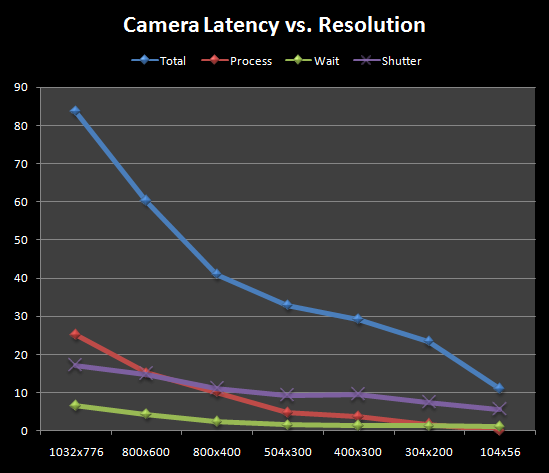

Say your baseball-hitting robot is using cameras to track and hit a thrown ball. In this and other systems where computer vision is a sensor for time-critical processes such as a tight control loop, latency is very important. My project, Micron, runs a high-frequency control loop to achieve micromanipulations in surgical environments. Micron depends on cameras to know where important anatomy is, track the tool tip, and register the cameras to other high-bandwidth position sensors. This week I analyzed and tried to optimize camera performance.

My system has dual Flea2 PointGrey cameras that can capture at 800×600 in YUV422 @ 30Hz, which is then converted to RGB on the PC. In order to increase bandwidth, I first swapped to Format7 Raw8 mode. The custom Format7 mode has a number of advantages:

- Bayer. You get the raw values from the CCD in Bayer format. This is a more compact (and native) representation than RGB or a YUV encoding, allowing for more data to pass over Firewire to the PC. Also, fast de-Bayering algorithms exist in popular software like OpenCV’s cvtColor or on the GPU.

- Region of interest. You can specify a custom region of interest so you don’t have to transfer the entire frame. This allows you to choose the ideal point in the “frame size vs. frame rate” curve. If you only need part of the frame, you can increase how fast images are being transferred to the PC.

- Event notifications. Technically available in other modes as well, event notifications allow you to process partial frames as they arrive. Each image consists of individual packets that are transferred sequentially over Firewire and the driver will let you know when each packet arrives. Theoretically, if your image processing algorithm operates on the image sequentially, you can transfer and process in tandem. For instance, at full 1032×776 resolution, it takes a Flea2 ~33 ms from the first image packet to the last image packet. Traditionally you can’t start processing the image until the last packet has arrived and the full image is available. However, if you are, say, doing color blob tracking where you need to test each pixel to see if it is some predefined color such as red, you can start as soon as parts of the image arrive. After 10% of the image arrives, you can process that part while the second 10% is being transferred from the camera. This can theoretically reduce overall latency significantly because image transfer and image processing are no longer mutually exclusive.
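To put rough numbers on that last point, here is a back-of-envelope sketch of the two approaches. The ~33 ms transfer time is from above; the 10 ms processing time, the function names, and the assumption that slices are equal-sized and processing cost is spread evenly across them are all mine, purely for illustration.

```shell
#!/usr/bin/env bash
# Back-of-envelope latency model; all times are integer microseconds.
# Hypothetical names; the 10 ms processing time below is an assumed figure.

# Classic approach: wait for the whole frame, then process it.
sequential_latency_us() {
  local transfer_us=$1 process_us=$2
  echo $(( transfer_us + process_us ))
}

# Pipelined approach: the frame arrives in equal slices and each slice is
# processed while the next one is still in transit.
pipelined_latency_us() {
  local transfer_us=$1 process_us=$2 slices=$3
  # If processing keeps up with the transfer, only the last slice's
  # processing is left once the final packet lands.
  local transfer_bound=$(( transfer_us + process_us / slices ))
  # If processing is the bottleneck, it starts after the first slice
  # arrives and then runs back to back.
  local process_bound=$(( transfer_us / slices + process_us ))
  if (( transfer_bound > process_bound )); then
    echo "$transfer_bound"
  else
    echo "$process_bound"
  fi
}

sequential_latency_us 33000 10000      # whole-frame wait
pipelined_latency_us 33000 10000 10    # ten slices
pipelined_latency_us 33000 10000 128   # 128 notifications per image
```

With these assumed numbers the model gives 43 ms for the classic approach, 34 ms with ten slices, and about 33.1 ms with 128 slices, so in the ideal case the processing time nearly disappears behind the transfer time.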

I tried using all these tricks to reduce latency, although I wasn’t able to get event notifications working properly. At first, it seemed like a great idea. I was able to get up to 128 notifications per image, which is amazing! If my algorithm runs realtime, that means I could reduce my processing overhead to the time taken to process less than 1% of the image. However, it seems they have a number of limitations and peculiarities that may be due to my poor programming or setup.

To test latency, I used the color detection and blob tracker code I run to find colored paint marks on my tool tip and applied it to a blinking LED problem. At a random time, I turn a green LED on and I wait until my color blob detector sees the LED – the time between these two events is, by definition, the latency between when something happens and the cameras see it happening. The result is shown below. Total is the time between turning the LED on and detecting it. Process time is the image processing time. Wait time is the average time a frame spends while waiting for the previous image to process. And shutter time is the average time between the LED turning on and the shutter for the next image capturing the LED.

So thanks to some urging by a fellow PhD student, I’ve uploaded a new version of my Facebook Downloader and swapped the site over to the new WordPress version. The old site is still there so old links work, which means eventually I’ll have to do individual redirects so that people following old links get the newest content. Oh well, something for another day.

Why are you so crazy, Linux? A friend asked me to look at why his installation of Matlab + code for the ImageNet 2010 competition on his VMWare Linux box wasn’t working. I logged on and typed in matlab, but got “matlab: command not found”, which was strange because he said he had installed it. Doing a “locate matlab” told me matlab was in /usr/bin/MATHWORKS_R2008B/bin so I executed

    PATH=/usr/bin/MATHWORKS_R2008B/bin:$PATH
    export PATH
    matlab

That worked as I got a nice Matlab splashscreen, but then it just crashed printing out a cryptic “Opening log file: /home/usr/java.lgo.11195” message. That log file had the error “Could not reserve enough space for object heap”, which sounded suspiciously like not enough memory. Sure enough, only 384 MB of RAM were allocated to the VMWare virtual machine. Change that and reboot.
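In hindsight, a quick look at how much RAM the guest actually sees would have pointed at the problem sooner. Here is a minimal sketch, assuming a Linux guest with the usual /proc/meminfo; the helper name `total_ram_mb` is made up for this post, and the optional file argument is only there so it can be tried on a sample file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print total RAM in MB from a meminfo-style file.
# Defaults to /proc/meminfo, which reports MemTotal in kB on Linux.
total_ram_mb() {
  awk '/^MemTotal:/ { print int($2 / 1024) }' "${1:-/proc/meminfo}"
}

# Only query the live system where /proc/meminfo exists.
if [ -r /proc/meminfo ]; then
  total_ram_mb
fi
```

On a box like that VM, the number printed would be at or a bit below the RAM allocated to the virtual machine, since the kernel reserves some for itself.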

And of course by then, my local export PATH command was no longer in effect, so I added the following line to /etc/profile:

    PATH=/usr/bin/MATHWORKS_R2008B/bin:/usr/bin/MATHWORKS_R2008B/bin/utils/mex;
    ^^^ DON'T DO THIS!!!

Reboot and I can’t even log in. Ctrl + Alt + F1 to get to the command console, and massive amounts of errors later I found out that, bleck, I had overwritten all the other PATH entries too. Now I have to /usr/bin/sudo /usr/bin/nano /etc/profile and change the line to include the old path as well.

    PATH=$PATH:/usr/bin/MATHWORKS_R2008B/bin:/usr/bin/MATHWORKS_R2008B/bin/utils/mex
    export PATH
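For next time, a slightly more defensive way to do this is a tiny helper that only ever appends to PATH and skips directories that are already listed. This is just a sketch: `path_append` is a name I made up, not a standard command, and it sticks to plain sh syntax on the assumption that /etc/profile may be read by shells other than bash.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not a standard command): append a directory to PATH
# only if it is not already listed, leaving the existing entries untouched.
path_append() {
  case ":$PATH:" in
    *":$1:"*) ;;                        # already present, nothing to do
    *) PATH="${PATH:+$PATH:}$1" ;;      # keep the old PATH, add $1 at the end
  esac
  export PATH
}

path_append /usr/bin/MATHWORKS_R2008B/bin
path_append /usr/bin/MATHWORKS_R2008B/bin/utils/mex
```

Because the old value is always kept, a typo in the new directory costs you a missing command rather than a machine you can’t log in to.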

Whew, now matlab starts up and we can execute mex. Except now matlab keeps whining about “cannot write to preference file “matlab.prf” in “/home/user/.matlab/R2008b/””. A quick Google search says we need to execute

    sudo chown user /home/user/.matlab/R2008b/matlab.prf
    sudo chmod a+w /home/user/.matlab -R

That first one might be redundant, but there are several files matlab needs to edit and the first command only solves the first problem.

Running the make file resulted in “/usr/bin/ld: cannot find -lstdc++”, which is interesting. Not entirely sure what was going on, I decided to do a sanity check and wrote a nice hello_world.cpp program and tried compiling it with g++, only to discover g++ wasn’t installed. Install g++, do another sudo ldconfig for good measure, and bam, it all compiles nicely. However, running make from the feature directory whined about u_int32_t in item.hpp, so a quick “typedef unsigned int u_int32_t;” in item.hpp later, g++ is complaining about not being able to link to -lvl. Turns out their Makefile needed to be pointed to ./3rd-party/vlfeat/bin/glx and then things are happy.

Whew! Finally, we can run their example script: extract_bow.sh. Alas still no luck, all sorts of complaining going on. The first is “./vldsift: error while loading shared libraries: libvl.so: cannot open shared object file: No such file or directory” Bleck! Adding the directory that libvl.so is in to /etc/ld.so.conf seemed to work and the demo program ran.
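For the record, there are two usual ways to let the loader find a library like libvl.so. The sketch below assumes the library sits in the same 3rd-party/vlfeat/bin/glx directory the Makefile was pointed at and that you run it from the top of the source tree; adjust the path if yours lives elsewhere.

```shell
#!/usr/bin/env bash
# Assumed location of libvl.so, relative to the directory you run this from.
VLFEAT_LIB_DIR="$PWD/3rd-party/vlfeat/bin/glx"

# Option 1: per-session and no root needed. Put the directory in front of
# whatever LD_LIBRARY_PATH already holds (if anything).
export LD_LIBRARY_PATH="$VLFEAT_LIB_DIR${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}"

# Option 2: system-wide, as described above. Left commented out because it
# needs root. ldconfig rebuilds the loader cache so the new entry is used.
# echo "$VLFEAT_LIB_DIR" | sudo tee -a /etc/ld.so.conf
# sudo ldconfig
```

Option 1 only lasts for the current shell and its children, which is handy for trying a demo script without touching system files.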

Sigh…Linux, why are you so crazy? Or maybe the better question is: you guys who programmed Linux, why are you so crazy?